44 soft labels deep learning

Pseudo Labelling - A Guide To Semi-Supervised Learning Semi-Supervised Learning (SSL) is a mixture of supervised and unsupervised learning. There are three kinds of machine learning approaches: supervised, unsupervised, and reinforcement learning. Supervised learning, as we know, is where both data and labels are present. Unsupervised learning is where only data, and no labels, are present.

Image segmentation metrics - Keras To compute IoUs, the predictions are accumulated in a confusion matrix, weighted by sample_weight, and the metric is then calculated from it. If sample_weight is None, weights default to 1. Use a sample_weight of 0 to mask values. Note that this class first computes the IoU of each individual class, then returns the mean of these values.
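A minimal NumPy sketch of the mean-IoU computation described in the Keras snippet: predictions are accumulated into a confusion matrix weighted by `sample_weight`, a per-class IoU is computed from it, and the mean is returned. This is an illustration, not Keras's own code; the function name `mean_iou` is hypothetical, and leaving out classes that never appear (zero union) is an assumption about how empty classes are handled.

```python
import numpy as np

def mean_iou(y_true, y_pred, num_classes, sample_weight=None):
    """Mean IoU from a weighted confusion matrix (illustrative sketch)."""
    y_true = np.asarray(y_true).ravel()
    y_pred = np.asarray(y_pred).ravel()
    if sample_weight is None:
        # Weights default to 1 for every element.
        sample_weight = np.ones_like(y_true, dtype=float)
    else:
        sample_weight = np.asarray(sample_weight, dtype=float).ravel()

    # Accumulate into a confusion matrix: rows = true class, columns = predicted class.
    # A weight of 0 contributes nothing, which masks that element.
    cm = np.zeros((num_classes, num_classes), dtype=float)
    np.add.at(cm, (y_true, y_pred), sample_weight)

    intersection = np.diag(cm)
    # Union = predicted total + true total - intersection, per class.
    union = cm.sum(axis=0) + cm.sum(axis=1) - intersection
    # Assumption: classes with an empty union are excluded from the mean.
    valid = union > 0
    iou = intersection[valid] / union[valid]
    return float(iou.mean())
```

With `y_true=[0, 0, 1, 1]` and `y_pred=[0, 1, 0, 1]`, each class has IoU 1/3, so the mean is 1/3; giving the two mismatched elements a weight of 0 masks them and the result becomes 1.0.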

[2007.05836] Meta Soft Label Generation for Noisy Labels - arXiv.org The existence of noisy labels in the dataset causes significant performance degradation for deep neural networks (DNNs). To address this problem, we propose a Meta Soft Label Generation algorithm called MSLG, which can jointly generate soft labels using meta-learning techniques and learn DNN parameters in an end-to-end fashion.

Soft labels deep learning

Understanding Deep Learning on Controlled Noisy Labels - Google AI Blog In "Beyond Synthetic Noise: Deep Learning on Controlled Noisy Labels", published at ICML 2020, we make three contributions towards better understanding deep learning on non-synthetic noisy labels. First, we establish the first controlled dataset and benchmark of realistic, real-world label noise sourced from the web (i.e., web label noise ...

Softmax Classifiers Explained - PyImageSearch

How to Develop an Ensemble of Deep Learning Models in Keras 28.8.2020 · Deep learning neural network models are highly flexible nonlinear algorithms capable of learning a nearly infinite number of mapping functions. A frustration with this flexibility is the high variance in a final model. The same neural network model trained on the same dataset may find one of many different possible “good enough” solutions each time […]
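A minimal sketch of model averaging, one common way to build an ensemble that reduces this variance: average the class probabilities predicted by several independently trained models, then take the argmax. The probability arrays below are made-up stand-ins for the softmax outputs of three trained models, and `average_ensemble` is a hypothetical helper, not a Keras API.

```python
import numpy as np

def average_ensemble(prob_list):
    """Model-averaging ensemble: mean of the members' class probabilities, then argmax."""
    # Stack to shape (n_models, n_samples, n_classes) and average over the models.
    probs = np.mean(np.stack(prob_list, axis=0), axis=0)
    return probs, probs.argmax(axis=1)

# Hypothetical softmax outputs of three models on the same two samples.
m1 = np.array([[0.6, 0.4], [0.3, 0.7]])
m2 = np.array([[0.4, 0.6], [0.2, 0.8]])
m3 = np.array([[0.7, 0.3], [0.6, 0.4]])
probs, labels = average_ensemble([m1, m2, m3])
```

Individual models disagree here (model 2 prefers class 1 for the first sample, model 3 prefers class 0 for the second), but the averaged probabilities give labels 0 and 1. The averaged rows still sum to 1, so they are themselves soft label distributions.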

Deep Learning: A Comprehensive Overview on Techniques, Taxonomy ... 18.8.2021 · Deep learning (DL), a branch of machine learning (ML) and artificial intelligence (AI), is nowadays considered a core technology of today’s Fourth Industrial Revolution (4IR or Industry 4.0). Due to its ability to learn from data, DL technology, which originated from artificial neural networks (ANNs), has become a hot topic in the context of computing, and is widely …

A semi-supervised learning approach for soft labeled data Abstract: In some machine learning applications, using soft labels is more useful and informative than using crisp labels. Soft labels indicate the degree of membership of the training data in the given classes. Often only a small amount of labeled data is available, while unlabeled data is abundant.

Validation of Soft Labels in Developing Deep Learning Algorithms for Detecting Lesions of Myopic Maculopathy From Optical Coherence Tomographic Images The predicted probabilities from the models trained with soft labels were close to the assessments made by myopia specialists.

Learning to Purify Noisy Labels via Meta Soft Label Corrector Recent deep neural networks (DNNs) can easily overfit to biased training data with noisy labels. A label correction strategy is commonly used to alleviate this issue: a method is designed to identify suspected noisy labels and then correct them.

COLAM: Co-Learning of Deep Neural Networks and Soft Labels via ... We study the technical problem of co-learning soft labels and a deep neural network in a single end-to-end training process in a self-distillation setting. We design two objectives to learn the model and the soft labels respectively, where the two objective functions depend on each other.

How To Label Data For Semantic Segmentation Deep Learning Models ... Labeling data for computer vision is challenging, as there are multiple types of techniques used to train algorithms that learn from data sets and predict results. Image annotation...

How to map softMax output to labels in MXNet - Stack Overflow In deep learning, the predictions are often encoded using one-hot vectors. I am using MXNet to create a simple neural network that classifies images of animals as cats, dogs, horses, etc. When I call the Predict method of MXNet, it returns a softmax output. Now, how do I determine that the index of the entry in the softmax output ...

Label-Free Quantification You Can Count On: A Deep Learning ... - Olympus Although it shows excellent correspondence between the two methods, the total number of objects detected with deep learning was around 3% higher. Figure 2: Nuclei detected using fluorescence (left), the corresponding brightfield image (middle), and object shape predicted by deep learning technology (right).
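A minimal sketch of the usual answer to the MXNet question, assuming the softmax output is a 2-D array of shape (samples, classes) and that index *i* corresponds to whatever class was encoded as integer label *i* during training. `CLASS_NAMES` and its order are hypothetical; an MXNet `NDArray` can first be converted to NumPy with `.asnumpy()`.

```python
import numpy as np

# Hypothetical class list: its order must match the integer labels used in training.
CLASS_NAMES = ["cat", "dog", "horse"]

def softmax_to_labels(softmax_output, class_names=CLASS_NAMES):
    """Map each row of a softmax output to (label name, probability of that label)."""
    probs = np.asarray(softmax_output)
    idx = probs.argmax(axis=1)  # index of the most probable class for each sample
    return [(class_names[i], float(probs[row, i])) for row, i in enumerate(idx)]

preds = softmax_to_labels([[0.1, 0.7, 0.2], [0.8, 0.15, 0.05]])
# → [('dog', 0.7), ('cat', 0.8)]
```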

Learning Soft Labels via Meta Learning The learned labels continuously adapt themselves to the model's state, thereby providing dynamic regularization. When applied to the task of supervised image classification, our method leads to consistent gains across different datasets and architectures. For instance, dynamically learned labels improve ResNet18 by 2.1% on CIFAR100.

subeeshvasu/Awesome-Learning-with-Label-Noise - GitHub 2019-ICML - Combating Label Noise in Deep Learning Using Abstention. 2019-ICML - SELFIE: Refurbishing unclean samples for robust deep learning. 2019-ICASSP - Learning Sound Event Classifiers from Web Audio with Noisy Labels. ... 2020-ICPR - Meta Soft Label Generation for Noisy Labels. 2020-IJCV ...

GitHub - weijiaheng/Advances-in-Label-Noise-Learning: A ... Jun 15, 2022 · A Novel Perspective for Positive-Unlabeled Learning via Noisy Labels. Ensemble Learning with Manifold-Based Data Splitting for Noisy Label Correction. MetaLabelNet: Learning to Generate Soft-Labels from Noisy-Labels. On the Robustness of Monte Carlo Dropout Trained with Noisy Labels.

Adversarial Attacks and Defenses in Deep Learning Mar 01, 2020 · Qi CR, Su H, Mo K, Guibas LJ. PointNet: deep learning on point sets for 3D classification and segmentation. In: Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition; 2017 Jul 21–26; Honolulu, HI, USA; 2017. p. 652–60.

(PDF) Deep learning with noisy labels: Exploring techniques and ... In this paper, we first review the state-of-the-art in handling label noise in deep learning. Then, we review studies that have dealt with label noise in deep learning for medical image analysis....

How to make use of "soft" labels in binary classification - Quora Classification is a supervised machine learning technique in which we classify data into several groups based on their characteristics and past behavior. For example, let's consider 100 fruits (apples, oranges, and bananas), each labeled with its name.

What is the definition of "soft label" and "hard label"? A soft label is one that has a score (probability or likelihood) attached to it. So an element is a member of the class in question with a probability/likelihood score of, e.g., 0.7; this implies that an element can be a member of multiple classes (presumably with different membership scores), which is usually not possible with hard labels.
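To make the definition concrete, here is a minimal NumPy sketch of cross-entropy against a soft target, assuming a soft label is a probability vector over the classes (a hard label is the special case of a one-hot vector). The function name and example values are illustrative only.

```python
import numpy as np

def soft_cross_entropy(logits, soft_targets):
    """Mean cross-entropy between soft target distributions and softmax(logits)."""
    logits = np.asarray(logits, dtype=float)
    targets = np.asarray(soft_targets, dtype=float)
    # Numerically stable log-softmax over the class axis.
    shifted = logits - logits.max(axis=1, keepdims=True)
    log_probs = shifted - np.log(np.exp(shifted).sum(axis=1, keepdims=True))
    return float(-(targets * log_probs).sum(axis=1).mean())

logits = np.array([[2.0, 1.0, -1.0]])
hard = np.array([[1.0, 0.0, 0.0]])  # hard label: member of class 0 only
soft = np.array([[0.7, 0.3, 0.0]])  # soft label: 0.7 class 0, 0.3 class 1
loss_hard = soft_cross_entropy(logits, hard)
loss_soft = soft_cross_entropy(logits, soft)
```

With the soft target, the loss is the 0.7/0.3 weighted average of the per-class losses, so the model is also rewarded for placing some probability on class 1.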

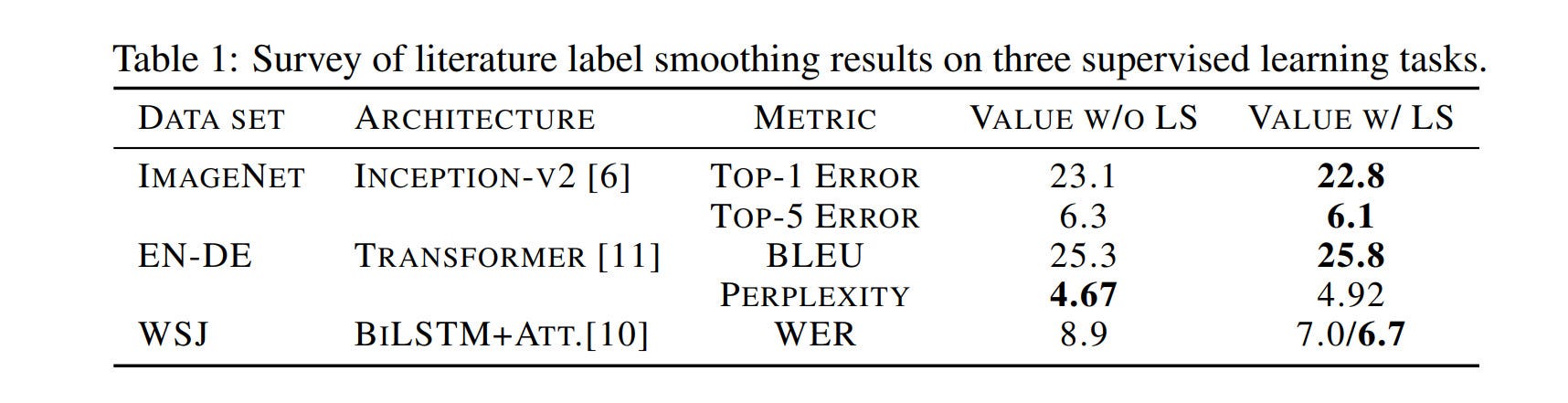

Label Smoothing: An ingredient of higher model accuracy These are soft labels, instead of hard labels (that is, 0 and 1). This ultimately gives a lower loss when a prediction is incorrect, so the model is penalized slightly less and, when a label is itself wrong, learns the incorrect target to a slightly lesser degree.
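A minimal sketch that checks this claim numerically, assuming the common smoothing rule y_ls = (1 - α)·y + α/K (the form Keras documents for its `label_smoothing` argument) with α = 0.1 and K = 3 classes. The prediction vector is made up.

```python
import numpy as np

def smooth_labels(one_hot, alpha=0.1):
    """Label smoothing: y_ls = (1 - alpha) * y + alpha / K."""
    one_hot = np.asarray(one_hot, dtype=float)
    k = one_hot.shape[-1]
    return one_hot * (1.0 - alpha) + alpha / k

def cross_entropy(targets, probs):
    """Cross-entropy of one sample's predicted probabilities against a target vector."""
    return float(-(np.asarray(targets) * np.log(np.asarray(probs))).sum())

pred = np.array([0.90, 0.05, 0.05])    # confident but wrong: the true class is 1
hard = np.array([0.0, 1.0, 0.0])
soft = smooth_labels(hard, alpha=0.1)  # about [0.033, 0.933, 0.033]
loss_hard = cross_entropy(hard, pred)
loss_soft = cross_entropy(soft, pred)
```

Here the smoothed loss is lower (about 2.90 versus 3.00). The reverse holds for a confident correct prediction, where smoothing raises the loss, which is what discourages over-confident outputs.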

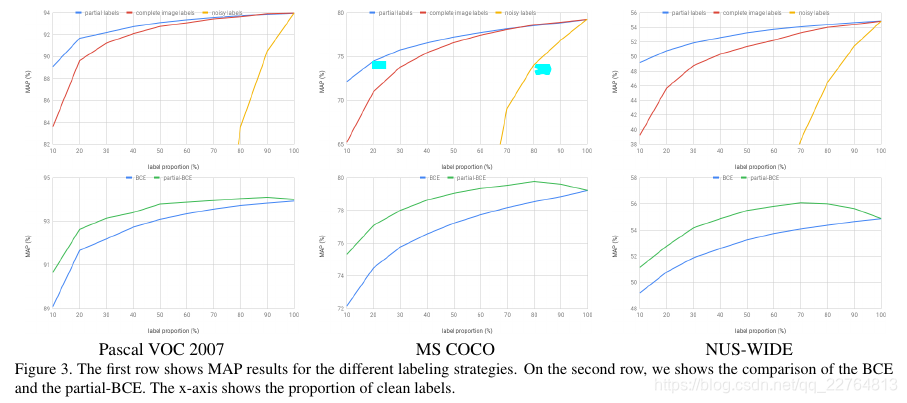

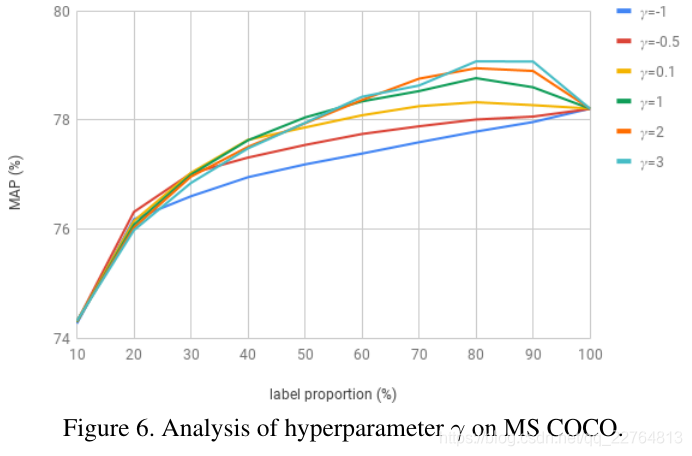

【multi-label】Learning a Deep ConvNet for Multi-label Classification with Partial Labels - blog of 猫猫与橙子 ("Kitty and Orange") ...

Learning from Noisy Labels with Deep Neural Networks: A Survey As noisy labels severely degrade the generalization performance of deep neural networks, learning from noisy labels (robust training) is becoming an important task in modern deep learning...

What is Label Smoothing? A technique to make your model less… | by ... Label smoothing is used when the loss function is cross-entropy and the model applies the softmax function to the logit vector z (computed from the penultimate layer's activations) to obtain its output probabilities p. In this setting, the gradient of the cross-entropy loss with respect to the logits is simply ∇CE = p - y = softmax(z) - y.
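The gradient identity can be checked numerically. This sketch, assuming NumPy and a central finite difference with step 1e-6, compares softmax(z) - y with the numerical gradient of the cross-entropy; the identity holds for any target y whose entries sum to 1, which includes smoothed labels. The values of z and y are arbitrary.

```python
import numpy as np

def softmax(z):
    e = np.exp(z - z.max())  # subtract the max for numerical stability
    return e / e.sum()

def ce_loss(z, y):
    """Cross-entropy of softmax(z) against target distribution y."""
    return float(-(y * np.log(softmax(z))).sum())

def numerical_grad(f, z, eps=1e-6):
    """Central finite-difference gradient of scalar function f at z."""
    g = np.zeros_like(z)
    for i in range(z.size):
        step = np.zeros_like(z)
        step[i] = eps
        g[i] = (f(z + step) - f(z - step)) / (2 * eps)
    return g

z = np.array([1.5, -0.3, 0.8])
y = np.array([0.05, 0.9, 0.05])  # a smoothed target; entries sum to 1
analytic = softmax(z) - y
numeric = numerical_grad(lambda v: ce_loss(v, y), z)
```

Because the gradient is p - y, a smoothed target keeps the gradient from vanishing until p matches the softened y, rather than pushing the correct logit toward infinity.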

Deep learning model to predict complex stress and strain … 9.4.2021 · In recent years, ML methods, especially deep learning (DL), have revolutionized our perspective on designing materials, modeling physical phenomena, and predicting properties (21–26). DL algorithms developed for computer vision and natural language processing can be used to segment biomedical images, design de novo proteins (28–30), and generate …

Data Labeling Software: Best Tools for Data Labeling - Neptune Dataturk. Dataturk is an open-source online tool that provides services primarily for labeling text, image, and video data. It simplifies the whole process by letting you upload data, collaborate with the workforce, and start tagging the data. This lets you build accurate datasets within a few hours.

Labelling Images - 15 Best Annotation Tools in 2022 - Folio3AI Blog Prodigy. Prodigy is a highly efficient and scriptable data annotation tool that is very easy to use and can train an AI model in only a few hours. It offers faster data collection and a more independent approach, and is said to have a higher rate of successful projects than other tools.

Unable to locate the downloaded datasets in Google Colab - Part 1 (2019) - Deep Learning Course ...

Robust Training of Deep Neural Networks with Noisy Labels by Graph ... The averaged labels are soft labels and capture the degree of confidence that each sample belongs to a certain class. Loss Function. The proposed method trains the DNN model with the following loss function, constructed from three terms:
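The snippet does not spell out how the labels are averaged over the graph, so the following is only a hypothetical illustration of the idea: build a k-nearest-neighbor graph on feature vectors and average the (possibly noisy) one-hot labels of each sample's neighbors. The k-NN graph, Euclidean distance, k = 3, and the name `knn_soft_labels` are all assumptions, not the paper's method.

```python
import numpy as np

def knn_soft_labels(features, labels, num_classes, k=3):
    """Hypothetical: average one-hot labels over each sample's k nearest neighbors (self included)."""
    x = np.asarray(features, dtype=float)
    one_hot = np.eye(num_classes)[np.asarray(labels)]
    # Pairwise squared Euclidean distances, shape (n, n).
    d = ((x[:, None, :] - x[None, :, :]) ** 2).sum(axis=-1)
    # The k closest samples; each sample is at distance 0 from itself, so it is included.
    nbrs = np.argsort(d, axis=1)[:, :k]
    # Average the neighbors' one-hot labels; each row sums to 1.
    return one_hot[nbrs].mean(axis=1)

features = np.array([[0.0], [0.1], [0.2], [5.0], [5.1], [5.2]])
labels = [0, 0, 1, 1, 1, 1]  # sample 2 looks mislabeled
soft = knn_soft_labels(features, labels, num_classes=2, k=3)
```

Sample 2 is labeled class 1 but sits among class-0 points, so its soft label becomes [2/3, 1/3], signaling low confidence in the given label, while samples in the clean cluster keep [0, 1].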

Speech synthesis: A review of the best text to speech architectures ... 13.5.2021 · However, in most cases, the quality of the synthesized speech is not ideal. This is where Deep Learning based ... The embeddings represent the acoustic expressiveness of different speakers and are trained with no explicit labels. In other ... Hard alignment between phonemes and their mel-spectrograms in contrast to soft ...

Label smoothing with Keras, TensorFlow, and Deep Learning This type of label assignment is called soft label assignment. Unlike hard label assignments, where class labels are binary (i.e., positive for one class and a negative example for all other classes), soft label assignment allows the positive class to have the largest probability while all other classes have a very small probability.

Understanding Dice Loss for Crisp Boundary Detection - Medium Therefore, the range of DSC is between 0 and 1, the larger the better. Thus we can use 1-DSC as Dice loss to maximize the overlap between two sets. In boundary detection tasks, the ground truth ...
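A minimal NumPy sketch of the Dice coefficient and the 1 - DSC loss, assuming predictions and ground truth are masks with values in [0, 1] (soft predictions work too). The small `eps` term, which avoids division by zero on empty masks, is an assumption rather than part of the snippet.

```python
import numpy as np

def dice_coefficient(pred, target, eps=1e-7):
    """DSC = 2|X ∩ Y| / (|X| + |Y|), computed on (soft) masks with values in [0, 1]."""
    pred = np.asarray(pred, dtype=float).ravel()
    target = np.asarray(target, dtype=float).ravel()
    intersection = (pred * target).sum()
    return float((2.0 * intersection + eps) / (pred.sum() + target.sum() + eps))

def dice_loss(pred, target):
    """1 - DSC: minimizing this maximizes the overlap between prediction and ground truth."""
    return 1.0 - dice_coefficient(pred, target)
```

Identical masks give a loss of about 0, disjoint masks about 1, and partial overlap falls in between (for `[1, 1, 0, 0]` against `[1, 0, 0, 0]`, DSC is 2/3).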

Semi-Supervised Learning With Label Propagation - Machine Learning Mastery Label Propagation Algorithm. Label Propagation is a semi-supervised learning algorithm. The algorithm was proposed in the 2002 technical report by Xiaojin Zhu and Zoubin Ghahramani titled "Learning From Labeled And Unlabeled Data With Label Propagation." The intuition for the algorithm is that a graph is created that connects all ...
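A minimal NumPy sketch of iterative label propagation in the spirit of that report: connect all points in a fully connected graph with Gaussian edge weights, repeatedly let each node take the weighted average of its neighbors' label distributions, and clamp the labeled nodes after every step. The row normalization, `sigma`, the fixed iteration count, and the use of -1 for unlabeled points are assumptions; this is not claimed to be the exact formulation in the report.

```python
import numpy as np

def label_propagation(x, y, num_classes, sigma=1.0, n_iter=200):
    """Propagate labels over a fully connected RBF graph; y uses -1 for unlabeled points."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y)
    labeled = y >= 0
    clamp = np.eye(num_classes)[y[labeled]]  # one-hot rows for the known labels
    # Edge weights: w_ij = exp(-||x_i - x_j||^2 / sigma^2).
    d2 = ((x[:, None, :] - x[None, :, :]) ** 2).sum(axis=-1)
    w = np.exp(-d2 / sigma**2)
    p = w / w.sum(axis=1, keepdims=True)  # row-normalized transition matrix
    # Start unlabeled nodes from a uniform distribution.
    f = np.full((len(y), num_classes), 1.0 / num_classes)
    f[labeled] = clamp
    for _ in range(n_iter):
        f = p @ f           # each node averages its neighbors' distributions
        f[labeled] = clamp  # keep the known labels fixed
    return f  # rows are soft label distributions

x = np.array([[0.0], [0.5], [1.0], [5.0], [5.5], [6.0]])
y = np.array([0, -1, -1, -1, -1, 1])  # only the two end points are labeled
f = label_propagation(x, y, num_classes=2)
```

The unlabeled points near x = 0 end up with class 0 and those near x = 6 with class 1. For real use, scikit-learn ships a ready-made estimator, `sklearn.semi_supervised.LabelPropagation`.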

An Overview of Multi-Task Learning for Deep Learning May 29, 2017 · So far, we have focused on theoretical motivations for MTL. To make the ideas of MTL more concrete, we will now look at the two most commonly used ways to perform multi-task learning in deep neural networks. In the context of Deep Learning, multi-task learning is typically done with either hard or soft parameter sharing of hidden layers.
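A toy NumPy sketch of the two sharing schemes, assuming one hidden layer, ReLU activations, random weights, and a squared-L2 distance penalty for the soft variant (the penalty form is one common choice, not something the snippet specifies). All names (`W_shared`, `heads`, `soft_sharing_penalty`) are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hard parameter sharing: one hidden layer shared by all tasks, plus one output head per task.
W_shared = rng.normal(size=(4, 8))
heads = {"task_a": rng.normal(size=(8, 3)), "task_b": rng.normal(size=(8, 1))}

def forward_hard(x):
    """Every task reads the same shared ReLU representation, then applies its own head."""
    h = np.maximum(0.0, x @ W_shared)
    return {name: h @ W for name, W in heads.items()}

# Soft parameter sharing: each task keeps its own hidden layer.
W_a = rng.normal(size=(4, 8))
W_b = rng.normal(size=(4, 8))

def soft_sharing_penalty(weights, lam=1e-2):
    """Squared-L2 penalty on pairwise differences, added to the loss to keep task layers similar."""
    total = 0.0
    for i in range(len(weights)):
        for j in range(i + 1, len(weights)):
            total += float(((weights[i] - weights[j]) ** 2).sum())
    return lam * total
```

In the hard variant the tasks literally share parameters; in the soft variant they only share them through the penalty, whose strength `lam` controls how close the task-specific layers must stay.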

A deep learning system for detecting diabetic retinopathy 28.5.2021 · In previous studies, the deep learning systems were usually trained directly end-to-end from original fundus images to the labels of DR …